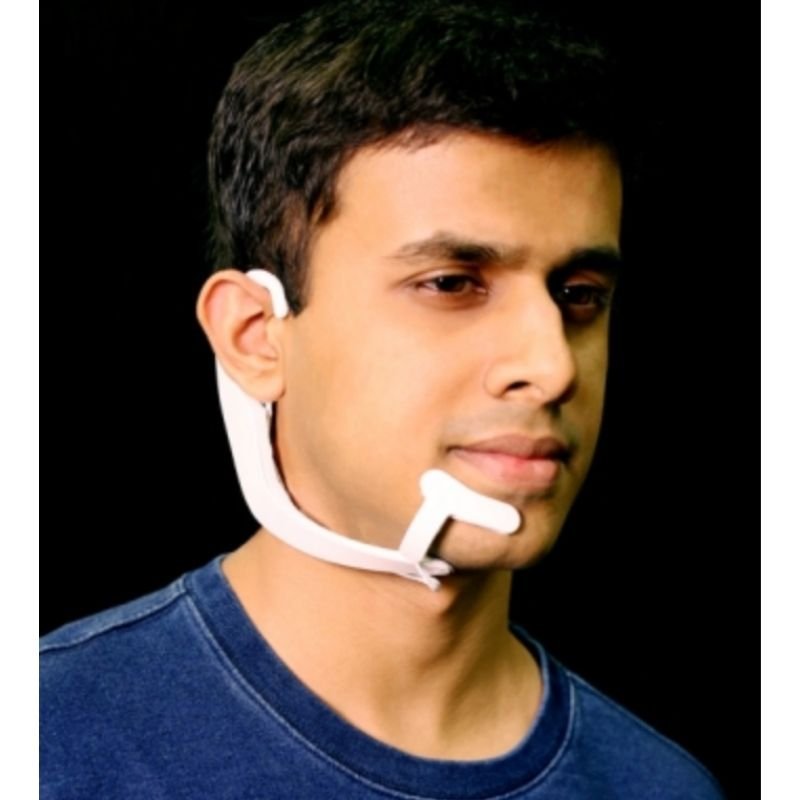

Arnav Kapur, an MIT graduate, has designed an AI-enabled ‘mind-reading’ headset. TIME has named the invention one of the best inventions of 2020, as the device could be a boon for people whose conditions make communication difficult, such as cerebral palsy and ALS. It lets them communicate with a computer without saying a word: the computer picks up the instruction they formulate in their head and carries it out.

Kapur made the list in the experimental category for this device.

About the Project

Speaking of the project, Kapur says its primary focus is to support communication for people with speech disorders, including those caused by conditions like ALS (amyotrophic lateral sclerosis) and MS (multiple sclerosis). Beyond this, the system has the potential to integrate humans and computers seamlessly, so that computing, the Internet, and AI weave into daily life as a “second self”. One example would be playing chess while wearing AlterEgo.

How does it work?

Say you want to know whether it will rain tomorrow. All you have to do is formulate that question in your head. The headset’s sensors then read the signals from the facial and vocal cord muscles that would have been triggered had you said it out loud. The device then carries out the action on your laptop.

You receive the relevant information through a bone conduction speaker. The device’s reported accuracy stands at 92%.

The device is built on the principle that even when we don’t speak out loud, the words that never make it to our mouth still pass through our internal speech system, which drives the movement of the tongue.

Demonstrating the scope of the innovation in a video, Kapur navigates a smart TV, finds out the time, and calculates how much he spent at the grocery store, all without uttering a word.

Although the device will help people who have communication problems, some have raised concerns about whether it is the first step toward machines knowing your every thought. In response, Kapur says there is no need to worry: AlterEgo doesn’t read your thoughts, only the commands that you specifically articulate in your head. And because it reads signals from your facial and vocal cord muscles, it has no access to your brain activity in the first place.

Currently, the system is not commercially available and is being tested in hospital settings for patients with MS and ALS.